What I Wish I Knew When Learning Haskell

Version 2.3

% Stephen Diehl % March 2016

Stephen Diehl (@smdiehl )

This is the fourth draft of this document.

License

This code and text are dedicated to the public domain. You can copy, modify, distribute and perform the work, even for commercial purposes, all without asking permission.

You may copy and paste any code here verbatim into your codebase, wiki, blog, book or Haskell musical production as you see fit. The Markdown and Haskell source is available on Github. Pull requests are always accepted for changes and additional content. This is a living document.

Changelog

2.4

2.3

- Stack

- Stackage

- ghcid

- Nix (Removed)

- Aeson (Updated)

- Language Extensions (Updated)

- Type Holes (Updated)

- Partial Type Signatures

- Pattern Synonyms (Updated)

- Unboxed Types ( Updated )

- Vim Integration ( Updated )

- Emacs Integration ( Updated )

- Strict Language Extension

- Injective Type Families

- Custom Type Errors

- Language Comparisons

- Recursive Do

- Applicative Do

- LiquidHaskell

- Cpp

- Minimal Pragma

- Typeclass Extensions

- ExtendedDefaultRules

- mmorph

- integer-gmp

- Static Pointers

- spoon

- monad-control

- monad-base

- postgresql-simple

- hedis

- happy/alex

- configurator

- string-conv

- resource-pool

- resourcet

- optparse-applicative

- hastache

- silently

- Mulitiline Strings

- git-embed

- Coercible

- -fdefer-type-errors

2.2

Sections that have had been added or seen large changes:

- Irrefutable Patterns

- Hackage

- Exhaustiveness

- Stacktraces

- Laziness

- Skolem Capture

- Foreign Function Pointers

- Attoparsec Parser

- Inline Cmm

- PrimMonad

- Specialization

- unbound-generics

- Editor Integration

- EKG

- Nix

- Haddock

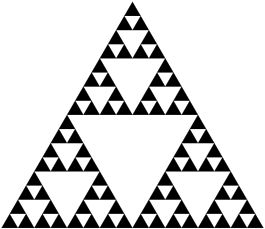

- Corecursion

- Category

- Arrows

- Bifunctors

- ExceptT

- hint / mueval

- Roles

- Higher Kinds

- Kind Polymorphism

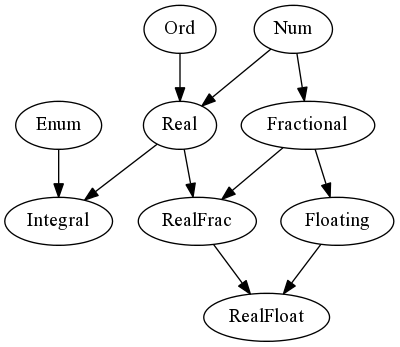

- Numeric Tower

- SAT Solvers

- Graph

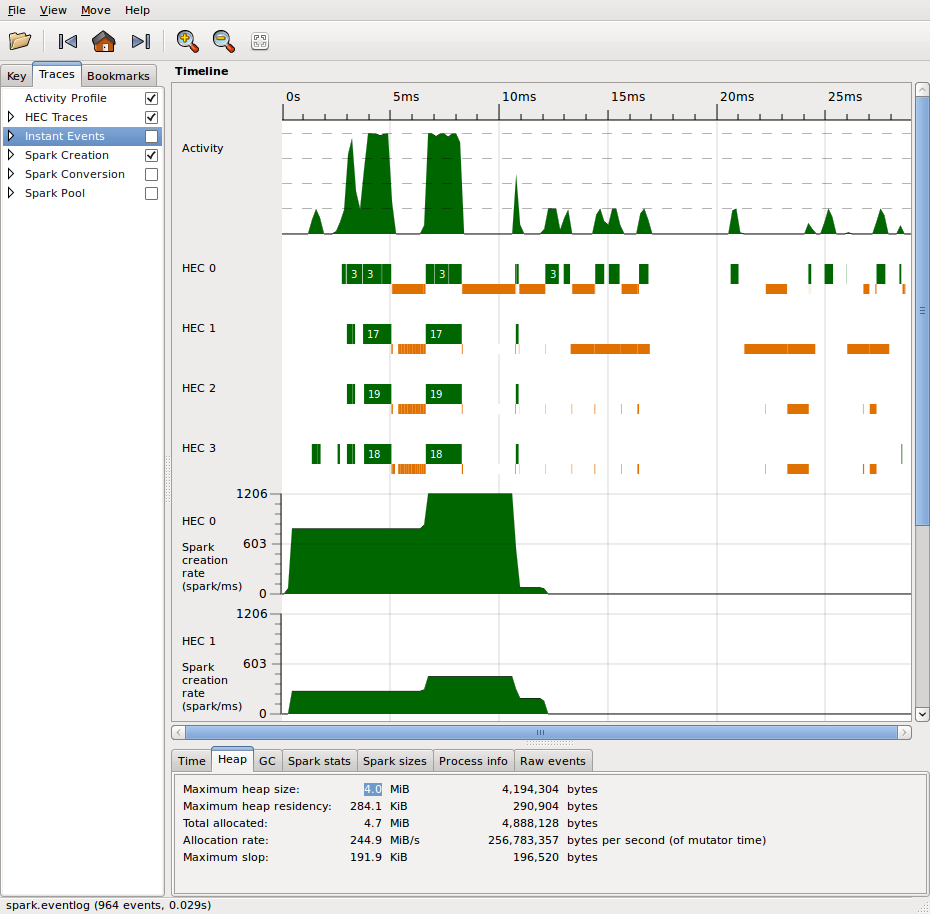

- Sparks

- Threadscope

- Generic Parsers

- GHC Block Diagram

- GHC Debug Flags

- Core

- Inliner

- Unboxed Types

- Runtime Memory Representation

- ghc-heapview

- STG

- Worker/Wrapper

- Z-Encoding

- Cmm

- Runtime Optimizations

- RTS Profiling

- Algebraic Relations

Basics

Cabal

Historically Cabal had a component known as cabal-install that has largely been replaced by Stack. The following use of Cabal sandboxes is left for historical reasons and can often be replaced by modern tools.

Cabal is the build system for Haskell.

For example, to install the parsec package to your system from Hackage, the upstream source of Haskell packages, invoke the install command:

$ cabal install parsec # latest version

$ cabal install parsec==3.1.5 # exact versionThe usual build invocation for Haskell packages is the following:

$ cabal get parsec # fetch source

$ cd parsec-3.1.5

$ cabal configure

$ cabal build

$ cabal installTo update the package index from Hackage, run:

$ cabal updateTo start a new Haskell project, run:

$ cabal init

$ cabal configureA .cabal file will be created with the configuration options for our new project.

The latest feature of cabal is the addition of Sandboxes, ( in cabal > 1.18 ) which are self contained environments of Haskell packages separate from the global package index stored in the ./.cabal-sandbox of our project's root. To create a new sandbox for our cabal project, run:

$ cabal sandbox initAdditionally, the sandbox can be torn down:

$ cabal sandbox deleteWhen in the working directory of a project with a sandbox that has a configuration already set up, invoking cabal commands alters the behaviour of cabal itself. For instance, the cabal install command will alter only the install to the local package index, not the global configuration.

To install the dependencies from the .cabal file into the newly created sandbox, run:

$ cabal install --only-dependenciesDependencies can also be built in parallel by passing -j<n> where n is the number of concurrent builds.

$ cabal install -j4 --only-dependenciesLet's look at an example .cabal file. There are two main entry points that any package may provide: a library and an executable. Multiple executables can be defined, but only one library. In addition, there is a special form of executable entry point Test-Suite, which defines an interface for invoking unit tests from cabal.

For a library, the exposed-modules field in the .cabal file indicates which modules within the package structure will be publicly visible when the package is installed. These modules are the user-facing APIs that we wish to expose to downstream consumers.

For an executable, the main-is field indicates the module that exports the main function running the executable logic of the application. Every module in the package must be listed in one of other-modules, exposed-modules or main-is fields.

name: mylibrary

version: 0.1

cabal-version: >= 1.10

author: Paul Atreides

license: MIT

license-file: LICENSE

synopsis: The code must flow.

category: Math

tested-with: GHC

build-type: Simple

library

exposed-modules:

Library.ExampleModule1

Library.ExampleModule2

build-depends:

base >= 4 && < 5

default-language: Haskell2010

ghc-options: -O2 -Wall -fwarn-tabs

executable "example"

build-depends:

base >= 4 && < 5,

mylibrary == 0.1

default-language: Haskell2010

main-is: Main.hs

Test-Suite test

type: exitcode-stdio-1.0

main-is: Test.hs

default-language: Haskell2010

build-depends:

base >= 4 && < 5,

mylibrary == 0.1To run an "executable" for a project under the cabal sandbox:

$ cabal run

$ cabal run <name> # when there are several executables in a projectTo load the "library" into a GHCi shell under cabal sandbox:

$ cabal repl

$ cabal repl <name>The <name> metavariable is either one of the executable or library declarations in the .cabal file and can optionally be disambiguated by the prefix exe:<name> or lib:<name> respectively.

To build the package locally into the ./dist/build folder, execute the build command:

$ cabal buildTo run the tests, our package must itself be reconfigured with the --enable-tests and the build-depends options. The Test-Suite must be installed manually, if not already present.

$ cabal install --only-dependencies --enable-tests

$ cabal configure --enable-tests

$ cabal test

$ cabal test <name>Moreover, arbitrary shell commands can be invoked with the GHC environmental variables set up for the sandbox. Quite common is to invoke a new shell with this command such that the ghc and ghci commands use the sandbox. ( They don't by default, which is a common source of frustration. ).

$ cabal exec

$ cabal exec sh # launch a shell with GHC sandbox path set.The haddock documentation can be generated for the local project by executing the haddock command. The documentation will be built to the ./dist folder.

$ cabal haddockWhen we're finally ready to upload to Hackage ( presuming we have a Hackage account set up ), then we can build the tarball and upload with the following commands:

$ cabal sdist

$ cabal upload dist/mylibrary-0.1.tar.gzSometimes you'd also like to add a library from a local project into a sandbox. In this case, run the add-source command to bring the library into the sandbox from a local directory:

$ cabal sandbox add-source /path/to/projectThe current state of a sandbox can be frozen with all current package constraints enumerated:

$ cabal freezeThis will create a file cabal.config with the constraint set.

constraints: mtl ==2.2.1,

text ==1.1.1.3,

transformers ==0.4.1.0Using the cabal repl and cabal run commands is preferable, but sometimes we'd like to manually perform their equivalents at the shell. Several useful aliases rely on shell directory expansion to find the package database in the current working directory and launch GHC with the appropriate flags:

alias ghc-sandbox="ghc -no-user-package-db -package-db .cabal-sandbox/*-packages.conf.d"

alias ghci-sandbox="ghci -no-user-package-db -package-db .cabal-sandbox/*-packages.conf.d"

alias runhaskell-sandbox="runhaskell -no-user-package-db -package-db .cabal-sandbox/*-packages.conf.d"There is also a zsh script to show the sandbox status of the current working directory in our shell:

function cabal_sandbox_info() {

cabal_files=(*.cabal(N))

if [ $#cabal_files -gt 0 ]; then

if [ -f cabal.sandbox.config ]; then

echo "%{$fg[green]%}sandboxed%{$reset_color%}"

else

echo "%{$fg[red]%}not sandboxed%{$reset_color%}"

fi

fi

}

RPROMPT="\$(cabal_sandbox_info) $RPROMPT"The cabal configuration is stored in $HOME/.cabal/config and contains various options including credential information for Hackage upload. One addition to configuration is to completely disallow the installation of packages outside of sandboxes to prevent accidental collisions.

-- Don't allow global install of packages.

require-sandbox: TrueA library can also be compiled with runtime profiling information enabled. More on this is discussed in the section on Concurrency and Profiling.

library-profiling: TrueAnother common flag to enable is documentation which forces the local build of Haddock documentation, which can be useful for offline reference. On a Linux filesystem these are built to the /usr/share/doc/ghc-doc/html/libraries/ directory.

documentation: TrueIf GHC is currently installed, the documentation for the Prelude and Base libraries should be available at this local link:

/usr/share/doc/ghc-doc/html/libraries/index.html

See:

Stack

Stack is a new approach to Haskell package structure that emerged in 2015. Instead of using a rolling build like cabal-install, stack breaks up sets of packages into release blocks that guarantee internal compatibility between sets of packages. The package solver for stack uses a different, more robust strategy for resolving dependencies than cabal-install has historically used.

Contrary to much misinformation, Stack does not replace Cabal as the build system and uses it under the hood. Stack simply streamlines integration with third-party packages and the resolution of their dependencies.

Install

To install stack on Ubuntu Linux, run:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 575159689BEFB442 # get fp complete key

echo 'deb http://download.fpcomplete.com/ubuntu trusty main'|sudo tee /etc/apt/sources.list.d/fpco.list # add appropriate source repo

sudo apt-get update && sudo apt-get install stack -yFor other operating systems, see the official install directions.

Usage

Once stack is installed, it is possible to setup a build environment on top of your existing project's cabal file by running:

stack initAn example stack.yaml file for GHC 7.10.3 would look like:

resolver: lts-6.4

flags: {}

extra-package-dbs: []

packages: []

extra-deps: []Most of the common libraries used in everyday development are already in the Stackage repository. The extra-deps field can be used to add Hackage dependencies that are not in the Stackage repository. They are specified by the package and the version key. For instance, the zenc package could be added to the stack build:

extra-deps:

- zenc-0.1.1The stack command can be used to install packages and executables into either the current build environment or the global environment. For example, the following command installs the executable for hlint, a popular linting tool for Haskell, and places it in the PATH:

$ stack install hlintTo check the set of dependencies, run:

$ stack list-dependenciesJust as with cabal, the build and debug process can be orchestrated using stack commands:

$ stack build # Build a cabal target

$ stack repl # Launch ghci

$ stack ghc # Invoke the standalone compiler in stack environment

$ stack exec bash # Execute a shell command with the stack GHC environment variables

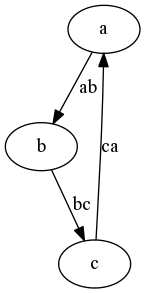

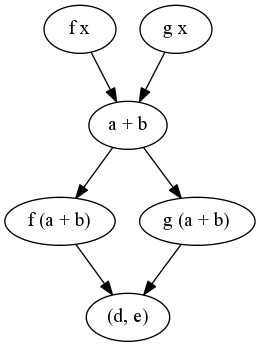

$ stack build --file-watch # Build on every filesystem changeTo visualize the dependency graph, use the dot command piped first into graphviz, then piped again into your favorite image viewer:

$ stack dot --external | dot -Tpng | feh -Flags

Enabling GHC compiler flags grants the user more control in detecting common code errors. The most frequently used flags are:

| Flag | Description |

|---|---|

| -fwarn-tabs | Emit warnings of tabs instead of spaces in the source code |

| -fwarn-unused-imports | Warn about libraries imported without being used |

| -fwarn-name-shadowing | Warn on duplicate names in nested bindings |

| -fwarn-incomplete-uni-patterns | Emit warnings for incomplete patterns in lambdas or pattern bindings |

| -fwarn-incomplete-patterns | Warn on non-exhaustive patterns |

| -fwarn-overlapping-patterns | Warn on pattern matching branches that overlap |

| -fwarn-incomplete-record-updates | Warn when records are not instantiated with all fields |

| -fdefer-type-errors | Turn type errors into warnings |

| -fwarn-missing-signatures | Warn about toplevel missing type signatures |

| -fwarn-monomorphism-restriction | Warn when the monomorphism restriction is applied implicitly |

| -fwarn-orphans | Warn on orphan typeclass instances |

| -fforce-recomp | Force recompilation regardless of timestamp |

| -fno-code | Omit code generation, just parse and typecheck |

| -fobject-code | Generate object code |

Like most compilers, GHC takes the -Wall flag to enable all warnings. However, a few of the enabled warnings are highly verbose. For example, -fwarn-unused-do-bind and -fwarn-unused-matches typically would not correspond to errors or failures.

Any of these flags can be added to the ghc-options section of a project's .cabal file. For example:

library mylib

ghc-options:

-fwarn-tabs

-fwarn-unused-imports

-fwarn-missing-signatures

-fwarn-name-shadowing

-fwarn-incomplete-patternsThe flags described above are simply the most useful. See the official reference for the complete set of GHC's supported flags.

For information on debugging GHC internals, see the commentary on GHC internals.

Hackage

Hackage is the upstream source of Free and/or Open Source Haskell packages. With Haskell's continuing evolution, Hackage has become many things to developers, but there seem to be two dominant philosophies of uploaded libraries.

Reusable Code / Building Blocks

In the first philosophy, libraries exist as reliable, community-supported building blocks for constructing higher level functionality on top of a common, stable edifice. In development communities where this method is the dominant philosophy, the author(s) of libraries have written them as a means of packaging up their understanding of a problem domain so that others can build on their understanding and expertise.

A Staging Area / Request for Comments

In contrast to the previous method of packaging, a common philosophy in the Haskell community is that Hackage is a place to upload experimental libraries as a means of getting community feedback and making the code publicly available. Library author(s) often rationalize putting these kind of libraries up undocumented, often without indication of what the library actually does, by simply stating that they intend to tear the code down and rewrite it later. This approach unfortunately means a lot of Hackage namespace has become polluted with dead-end, bit-rotting code. Sometimes packages are also uploaded purely for internal use within an organisation, to accompany a paper, or just to integrate with the cabal build system. These packages are often left undocumented as well.

For developers coming to Haskell from other language ecosystems that favor the former philsophy (e.g., Python, Javascript, Ruby), seeing thousands of libraries without the slightest hint of documentation or description of purpose can be unnerving. It is an open question whether the current cultural state of Hackage is sustainable in light of these philsophical differences.

Needless to say, there is a lot of very low-quality Haskell code and documentation out there today, so being conservative in library assessment is a necessary skill. That said, there are also quite a few phenomenal libraries on Hackage that are highly curated by many people.

As a general rule, if the Haddock documentation for the library does not have a minimal worked example, it is usually safe to assume that it is an RFC-style library and probably should be avoided in production-grade code.

Similarly, if the library predates the text library (released circa 2007), it probably should be avoided in production code. The way we write Haskell has changed drastically since the early days.

GHCi

GHCi is the interactive shell for the GHC compiler. GHCi is where we will spend most of our time in every day development.

| Command | Shortcut | Action |

|---|---|---|

:reload |

:r |

Code reload |

:type |

:t |

Type inspection |

:kind |

:k |

Kind inspection |

:info |

:i |

Information |

:print |

:p |

Print the expression |

:edit |

:e |

Load file in system editor |

:load |

:l |

Set the active Main module in the REPL |

:add |

:ad |

Load a file into the REPL namespace |

:browse |

:bro |

Browse all available symbols in the REPL namespace |

The introspection commands are an essential part of debugging and interacting with Haskell code:

λ: :type 3

3 :: Num a => aλ: :kind Either

Either :: * -> * -> *λ: :info Functor

class Functor f where

fmap :: (a -> b) -> f a -> f b

(<$) :: a -> f b -> f a

-- Defined in `GHC.Base'

...λ: :i (:)

data [] a = ... | a : [a] -- Defined in `GHC.Types'

infixr 5 :Querying the current state of the global environment in the shell is also possible. For example, to view module-level bindings and types in GHCi, run:

λ: :browse

λ: :show bindingsExamining module-level imports, execute:

λ: :show imports

import Prelude -- implicit

import Data.Eq

import Control.MonadTo see compiler-level flags and pragmas, use:

λ: :set

options currently set: none.

base language is: Haskell2010

with the following modifiers:

-XNoDatatypeContexts

-XNondecreasingIndentation

GHCi-specific dynamic flag settings:

other dynamic, non-language, flag settings:

-fimplicit-import-qualified

warning settings:

λ: :showi language

base language is: Haskell2010

with the following modifiers:

-XNoDatatypeContexts

-XNondecreasingIndentation

-XExtendedDefaultRulesLanguage extensions and compiler pragmas can be set at the prompt. See the Flag Reference for the vast collection of compiler flag options.

Several commands for the interactive shell have shortcuts:

| Function | |

|---|---|

+t |

Show types of evaluated expressions |

+s |

Show timing and memory usage |

+m |

Enable multi-line expression delimited by :{ and :}. |

λ: :set +t

λ: []

[]

it :: [a]λ: :set +s

λ: foldr (+) 0 [1..25]

325

it :: Prelude.Integer

(0.02 secs, 4900952 bytes)λ: :{

λ:| let foo = do

λ:| putStrLn "hello ghci"

λ:| :}

λ: foo

"hello ghci"The configuration for the GHCi shell can be customized globally by defining a ghci.conf in $HOME/.ghc/ or in the current working directory as ./.ghci.conf.

For example, we can add a command to use the Hoogle type search from within GHCi. First, install hoogle:

cabal install hoogleThen, we can enable the search functionality by adding a command to our ghci.conf:

:set prompt "λ: "

:def hlint const . return $ ":! hlint \"src\""

:def hoogle \s -> return $ ":! hoogle --count=15 \"" ++ s ++ "\""λ: :hoogle (a -> b) -> f a -> f b

Data.Traversable fmapDefault :: Traversable t => (a -> b) -> t a -> t b

Prelude fmap :: Functor f => (a -> b) -> f a -> f bFor reasons of sexiness, it is desirable to set your GHC prompt to a λ or a λΠ. Only if you're into that lifestyle, though.

:set prompt "λ: "

:set prompt "ΠΣ: "GHCi Performance

For large projects, GHCi with the default flags can use quite a bit of memory and take a long time to compile. To speed compilation by keeping artificats for compiled modules around, we can enable object code compilation instead of bytecode.

:set -fobject-codeEnabling object code compliation may complicate type inference, since type information provided to the shell can sometimes be less informative than source-loaded code. This under specificity can result in breakage with some langauge extensions. In that case, you can temporarily reenable bytecode compilation on a per module basis with the -fbyte-code flag.

:set -fbyte-code

:load MyModule.hsIf you all you need is to typecheck your code in the interactive shell, then disabling code generation entirely makes reloading code almost instantaneous:

:set -fno-codeEditor Integration

Haskell has a variety of editor tools that can be used to provide interactive development feedback and functionality such as querying types of subexpressions, linting, type checking, and code completion.

Several prepackaged setups exist to expedite the process of setting up many of the programmer editors for Haskell development. In particular, using ghc-mod can remarkably improve programmer efficiency and productivity because the project attempts to implement features common to modern IDEs.

Vim

Emacs

Atom

Bottoms

The bottom is a singular value that inhabits every type. When this value is evaluated, the semantics of Haskell no longer yield a meaningful value. In other words, further operations on the value cannot be defined in Haskell. A bottom value is usually written as the symbol ⊥, ( i.e. the compiler flipping you off ). Several ways exist to express bottoms in Haskell code.

For instance, undefined is an easily called example of a bottom value. This function has type a but lacks any type constraints in its type signature. Thus, undefined is able to stand in for any type in a function body, allowing type checking to succeed, even if the function is incomplete or lacking a definition entirely. The undefined function is extremely practical for debugging or to accommodate writing incomplete programs.

undefined :: a

mean :: Num a => Vector a -> a

mean nums = (total / count) where -- Partially defined function

total = undefined

count = undefined

addThreeNums :: Num a => a -> a -> a -> a

addThreeNums n m j = undefined -- No function body declared at all

f :: a -> Complicated Type

f = undefined -- Write tomorrow, typecheck today!

-- Arbitrarily complicated types

-- welcome!Another example of a bottom value comes from the evaluation of the error function, which takes a String and returns something that can be of any type. This property is quite similar to undefined, which also can also stand in for any type.

Calling error in a function causes the compiler to throw an exception, halt the program, and print the specified error message. In the divByY function below, passing the function 0 as the divisor results in this function results in such an exception.

error :: String -> a -- Takes an error message of type

-- String and returns whatever type

-- is needed

-- Annotated code that features use of the error function.

divByY:: (Num a, Eq a, Fractional a) => a -> a -> a

divByY _ 0 = error "Divide by zero error" -- Dividing by 0 causes an error

divByY dividend divisor = dividend / divisor -- Handles defined division

A third type way to express a bottom is with an infinitely looping term:

f :: a

f = let x = x in xExamples of actual Haskell code that use this looping syntax live in the source code of the GHC.Prim module. These bottoms exist because the operations cannot be defined in native Haskell. Such operations are baked into the compiler at a very low level. However, this module exists so that Haddock can generate documentation for these primitive operations, while the looping syntax serves as a placeholder for the actual implementation of the primops.

Perhaps the most common introduction to bottoms is writing a partial function that does not have exhaustive pattern matching defined. For example, the following code has non-exhaustive pattern matching because the case expression, lacks a definition of what to do with a B:

data F = A | B

case x of

A -> ()The code snippet above is translated into the following GHC Core output. The compiler inserts an exception to account for the non-exhaustive patterns:

case x of _ {

A -> ();

B -> patError "<interactive>:3:11-31|case"

}GHC can be made more vocal about incomplete patterns using the -fwarn-incomplete-patterns and -fwarn-incomplete-uni-patterns flags.

A similar situation can arise with records. Although constructing a record with missing fields is rarely useful, it is still possible.

data Foo = Foo { example1 :: Int }

f = Foo {} -- Record defined with a missing fieldWhen the developer omits a field's definition, the compiler inserts an exception in the GHC Core representation:

Foo (recConError "<interactive>:4:9-12|a")Fortunately, GHC will warn us by default about missing record fields.

Bottoms are used extensively throughout the Prelude, although this fact may not be immediately apparent. The reasons for including bottoms are either practical or historical.

The canonical example is the head function which has type [a] -> a. This function could not be well-typed without the bottom.

import GHC.Err

import Prelude hiding (head, (!!), undefined)

-- degenerate functions

undefined :: a

undefined = error "Prelude.undefined"

head :: [a] -> a

head (x:_) = x

head [] = error "Prelude.head: empty list"

(!!) :: [a] -> Int -> a

xs !! n | n < 0 = error "Prelude.!!: negative index"

[] !! _ = error "Prelude.!!: index too large"

(x:_) !! 0 = x

(_:xs) !! n = xs !! (n-1)It is rare to see these partial functions thrown around carelessly in production code because they cause the program to halt. The preferred method for handling exceptions is to combine the use of safe variants provided in Data.Maybe with the usual fold functions maybe and either.

Another method is to use pattern matching, as shown in listToMaybe, a safer version of head described below:

listToMaybe :: [a] -> Maybe a

listToMaybe [] = Nothing -- An empty list returns Nothing

listToMaybe (a:_) = Just a -- A non-empty list returns the first element

-- wrapped in the Just context.Invoking a bottom defined in terms of error typically will not generate any position information. However, assert, which is used to provide assertions, can be short-circuited to generate position information in the place of either undefined or error calls.

import GHC.Base

foo :: a

foo = undefined

-- *** Exception: Prelude.undefined

bar :: a

bar = assert False undefined

-- *** Exception: src/fail.hs:8:7-12: Assertion failedSee: Avoiding Partial Functions

Exhaustiveness

Pattern matching in Haskell allows for the possibility of non-exhaustive patterns. For example, passing Nothing to unsafe will cause the program to crash at runtime. However, this function is an otherwise valid, type-checked program.

unsafe :: Num a => Maybe a -> Maybe a

unsafe (Just x) = Just $ x + 1Since unsafe takes a Maybe a value as its argument, two possible values are valid input: Nothing and Just a. Since the case of a Nothing was not defined in unsafe, we say that the pattern matching within that function is non-exhaustive. In other words, the function does not implement appropriate handling of all valid inputs. Instead of yielding a value, such a function will halt from an incomplete match.

Partial functions from non-exhaustivity are a controversial subject, and frequent use of non-exhaustive patterns is considered a dangerous code smell. However, the complete removal of non-exhaustive patterns from the language would itself be too restrictive and forbid too many valid programs.

Several flags exist that we can pass to the compiler to warn us about such patterns or forbid them entirely either locally or globally.

$ ghc -c -Wall -Werror A.hs

A.hs:3:1:

Warning: Pattern match(es) are non-exhaustive

In an equation for `unsafe': Patterns not matched: NothingThe -Wall or -fwarn-incomplete-patterns flag can also be added on a per-module basis by using the OPTIONS_GHC pragma.

{-# OPTIONS_GHC -Wall #-}

{-# OPTIONS_GHC -fwarn-incomplete-patterns #-}A more subtle case of non-exhaustivity is the use of implicit pattern matching with a single uni-pattern in a lambda expression. In a manner similar to the unsafe function above, a uni-pattern cannot handle all types of valid input. For instance, the function boom will fail when given a Nothing, even though the type of the lambda expression's argument is a Maybe a.

boom = \(Just a) -> somethingNon-exhaustivity arising from uni-patterns in lambda expressions occurs frequently in let or do-blocks after desugaring, because such code is translated into lambda expressions similar to boom.

boom2 = let

Just a = something

boom3 = do

Just a <- somethingGHC can warn about these cases of non-exhaustivity with the -fwarn-incomplete-uni-patterns flag.

Grossly speaking, any non-trivial program will use some measure of partial functions. It is simply a fact. Thus, there exist obligations for the programmer than cannot be manifest in the Haskell type system.

Debugger

Since GHCi version 6.8.1, a built-in debugger has been available, although its use is somewhat rare. Debugging uncaught exceptions from bottoms or asynchronous exceptions is in similar style to debugging segfaults with gdb.

λ: :set -fbreak-on-exception -- Sets option for evaluation to stop on exception

λ: :break 2 15 -- Sets a break point at line 2, column 15

λ: :trace main -- Run a function to generate a sequence of evaluation steps

λ: :hist -- Step backwards from a breakpoint through previous steps of evaluation

λ: :back -- Step backwards a single step at a time through the history

λ: :forward -- Step forward a single step at a time through the historyStack Traces

With runtime profiling enabled, GHC can also print a stack trace when a diverging bottom term (error, undefined) is hit. This action, though, requires a special flag and profiling to be enabled, both of which are disabled by default. So, for example:

import Control.Exception

f x = g x

g x = error (show x)

main = try (evaluate (f ())) :: IO (Either SomeException ())$ ghc -O0 -rtsopts=all -prof -auto-all --make stacktrace.hs

./stacktrace +RTS -xcAnd indeed, the runtime tells us that the exception occurred in the function g and enumerates the call stack.

*** Exception (reporting due to +RTS -xc): (THUNK_2_0), stack trace:

Main.g,

called from Main.f,

called from Main.main,

called from Main.CAF

--> evaluated by: Main.main,

called from Main.CAFIt is best to run this code without optimizations applied -O0 so as to preserve the original call stack as represented in the source. With optimizations applied, GHC will rearrange the program in rather drastic ways, resulting in what may be an entirely different call stack.

See:

Trace

Since Haskell is a pure language, it has the unique property that most code is introspectable on its own. As such, using printf to display the state of the program at critical times throughout execution is often unnecessary because we can simply open GHCi and test the function. Nevertheless, Haskell does come with an unsafe trace function which can be used to perform arbitrary print statements outside of the IO monad.

import Debug.Trace

example1 :: Int

example1 = trace "impure print" 1

example2 :: Int

example2 = traceShow "tracing" 2

example3 :: [Int]

example3 = [trace "will not be called" 3]

main :: IO ()

main = do

print example1

print example2

print $ length example3

-- impure print

-- 1

-- "tracing"

-- 2

-- 1Trace uses unsafePerformIO under the hood and should not be used in stable code.

In addition to the trace function, several monadic trace variants are quite common.

import Text.Printf

import Debug.Trace

traceM :: (Monad m) => String -> m ()

traceM string = trace string $ return ()

traceShowM :: (Show a, Monad m) => a -> m ()

traceShowM = traceM . show

tracePrintfM :: (Monad m, PrintfArg a) => String -> a -> m ()

tracePrintfM s = traceM . printf sType Holes

Since the release of GHC 7.8, typed holes allow for debugging incomplete programs. By placing an underscore on any value on the right hand-side of a declaration, GHC will throw an error during type-checking. Such an error reflects what type the value in the position of the type hole could be in order for the program to type-check successfully.

instance Functor [] where

fmap f (x:xs) = f x : fmap f _[1 of 1] Compiling Main ( src/typedhole.hs, interpreted )

src/typedhole.hs:7:32:

Found hole ‘_’ with type: [a]

Where: ‘a’ is a rigid type variable bound by

the type signature for fmap :: (a -> b) -> [a] -> [b]

at src/typedhole.hs:7:3

Relevant bindings include

xs :: [a] (bound at src/typedhole.hs:7:13)

x :: a (bound at src/typedhole.hs:7:11)

f :: a -> b (bound at src/typedhole.hs:7:8)

fmap :: (a -> b) -> [a] -> [b] (bound at src/typedhole.hs:7:3)

In the second argument of ‘fmap’, namely ‘_’

In the second argument of ‘(:)’, namely ‘fmap f _’

In the expression: f x : fmap f _

Failed, modules loaded: none.GHC has rightly suggested that the expression needed to finish the program is xs :: [a].

Deferred Type Errors

Since the release of version 7.8, GHC supports the option of treating type errors as runtime errors. With this option enabled, programs will run, but they will fail when a mistyped expression is evaluated. This feature is enabled with the -fdefer-type-errors flag in three ways: at the module level, when compiled from the command line, or inside of a GHCi interactive session.

For instance, the program below will compile:

{-# OPTIONS_GHC -fdefer-type-errors #-} -- Enable deferred type

-- errors at module level

x :: ()

x = print 3

y :: Char

y = 0

z :: Int

z = 0 + "foo"

main :: IO ()

main = do

print xHowever, when a pathological term is evaluated at runtime, we'll see a message like:

defer: defer.hs:4:5:

Couldn't match expected type ‘()’ with actual type ‘IO ()’

In the expression: print 3

In an equation for ‘x’: x = print 3

(deferred type error)This error tells us that while x has a declared type of (), the body of the function print 3 has a type of IO (). However, if the term is never evaluated, GHC will not throw an exception.

ghcid

ghcid is a lightweight IDE hook that allows continuous feedback whenever code is updated. It can be run from the command line in the root of the cabal project directory by specifying a command to run (e.g. ghci, cabal repl, or stack repl).

ghcid --command="cabal repl" # Run cabal repl under ghcid

ghcid --command="stack repl" # Run stack repl under ghcid

ghcid --command="ghci baz.hs" # Open baz.hs under ghcidWhen a Haskell module is loaded into ghcid, the code is evaluated in order to provide the user with any errors or warnings that would happen at compile time. When the developer edits and saves code loaded into ghcid, the program automatically reloads and evaluates the code for errors and warnings.

Haddock

Haddock is the automatic documentation generation tool for Haskell source code. It integrates with the usual cabal toolchain. In this section, we will explore how to document code so that Haddock can generate documentation successfully.

Several frequent comment patterns are used to document code for Haddock. The first of these methods uses -- | to delineate the beginning of a comment:

-- | Documentation for f

f :: a -> a

f = ...Multiline comments are also possible:

-- | Multiline documentation for the function

-- f with multiple arguments.

fmap :: Functor f =>

=> (a -> b) -- ^ function

-> f a -- ^ input

-> f b -- ^ output-- ^ is also used to comment Constructors or Record fields:

data T a b

= A a -- ^ Documentation for A

| B b -- ^ Documentation for B

data R a b = R

{ f1 :: a -- ^ Documentation for the field f1

, f2 :: b -- ^ Documentation for the field f2

}Elements within a module (i.e. value, types, classes) can be hyperlinked by enclosing the identifier in single quotes:

data T a b

= A a -- ^ Documentation for 'A'

| B b -- ^ Documentation for 'B'Modules themselves can be referenced by enclosing them in double quotes:

-- | Here we use the "Data.Text" library and import

-- the 'Data.Text.pack' function.haddock also allows the user to include blocks of code within the generated documentation. Two methods of demarcating the code blocks exist in haddock. For example, enclosing a code snippet in @ symbols marks it as a code block:

-- | An example of a code block.

--

-- @

-- f x = f (f x)

-- @Similarly, it's possible to use bird tracks (>) in a comment line to set off a code block. This usage is very similar to Bird style Literate Haskell.

-- | A similar code block example that uses bird tracks (i.e. '>')

-- > f x = f (f x)Snippets of interactive shell sessions can also be included in haddock documentation. In order to denote the beginning of code intended to be run in a REPL, the >>> symbol is used:

-- | Example of an interactive shell session embedded within documentation

--

-- >>> factorial 5

-- 120Headers for specific blocks can be added by prefacing the comment in the module block with a *:

module Foo (

-- * My Header

example1,

example2

)Sections can also be delineated by $ blocks that pertain to references in the body of the module:

module Foo (

-- $section1

example1,

example2

)

-- $section1

-- Here is the documentation section that describes the symbols

-- 'example1' and 'example2'.Links can be added with the following syntax:

<url text>Images can also be included, so long as the path is either absolute or relative to the directory in which haddock is run.

<<diagram.png title>>haddock options can also be specified with pragmas in the source, either at the module or project level.

{-# OPTIONS_HADDOCK show-extensions, ignore-exports #-}| Option | Description |

|---|---|

| ignore-exports | Ignores the export list and includes all signatures in scope. |

| not-home | Module will not be considered in the root documentation. |

| show-extensions | Annotates the documentation with the language extensions used. |

| hide | Forces the module to be hidden from Haddock. |

| prune | Omits definitions with no annotations. |

Monads

Eightfold Path to Monad Satori

Much ink has been spilled waxing lyrical about the supposed mystique of monads. Instead, I suggest a path to enlightenment:

- Don't read the monad tutorials.

- No really, don't read the monad tutorials.

- Learn about Haskell types.

- Learn what a typeclass is.

- Read the Typeclassopedia.

- Read the monad definitions.

- Use monads in real code.

- Don't write monad-analogy tutorials.

In other words, the only path to understanding monads is to read the fine source, fire up GHC, and write some code. Analogies and metaphors will not lead to understanding.

Monadic Myths

The following are all false:

- Monads are impure.

- Monads are about effects.

- Monads are about state.

- Monads are about imperative sequencing.

- Monads are about IO.

- Monads are dependent on laziness.

- Monads are a "back-door" in the language to perform side-effects.

- Monads are an embedded imperative language inside Haskell.

- Monads require knowing abstract mathematics.

- Monads are unique to Haskell.

See: What a Monad Is Not

Monadic Methods

Monads are not complicated. They are implemented as a typeclass with two methods, return and (>>=) (pronounced "bind"). In order to implement a Monad instance, these two functions must be defined in accordance with the arity described in the typeclass definition:

class Monad m where

return :: a -> m a -- N.B. 'm' refers to a type constructor

-- (e.g., Maybe, Either, etc.) that

-- implements the Monad typeclass

(>>=) :: m a -> (a -> m b) -> m bThe first type signature in the Monad class definition is for return. Any preconceptions one might have for the word "return" should be discarded: It has an entirely different meaning in the context of Haskell and acts very differently than in languages like C, Python, or Java. Instead of being the final arbiter of what value a function produces, return in Haskell injects a value of type a into a monadic context (e.g., Maybe, Either, etc.), which is denoted as m a.

The other function essential to implementing a Monad instance is (>>=). This infix takes two arguments. On its left side is a value with type m a, while on the right side is a function with type (a -> m b). The bind operation results in a final value of type m b.

A third, auxiliary function ((>>)) is defined in terms of the bind operation that discards its argument.

(>>) :: Monad m => m a -> m b -> m b

m >> k = m >>= \_ -> kThis definition says that (>>) has a left and right argument which are monadic with types m a and m b respectively, while the infix returns a value of type m b. The actual implementation of (>>) says that when m is passed to (>>) with k on the right, the value k will always be returned.

Laws

In addition to specific implementations of (>>=) and return, all monad instances must satisfy three laws.

Law 1

The first law says that when return a is passed through a (>>=) into a function f, this expression is exactly equivalent to f a.

return a >>= f ≡ f a -- N.B. 'a' refers to a value, not a typeIn discussing the next two laws, we'll refer to a value m. This notation is shorthand for value wrapped in a monadic context. Such a value has type m a, and could be represented more concretely by values like Nothing, Just x, or Right x. It is important to note that some of these concrete instantiations of the value m have multiple components. In discussing the second and third monad laws, we'll see some examples of how this plays out.

Law 2

The second law states that a monadic value m passed through (>>=) into return is exactly equivalent to itself. In other words, using bind to pass a monadic value to return does not change the initial value.

m >>= return ≡ m -- 'm' here refers to a value that has type 'm a'A more explicit way to write the second Monad law exists. In this following example code, the first expression shows how the second law applies to values represented by non-nullary type constructors. The second snippet shows how a value represented by a nullary type constructor works within the context of the second law.

(SomeMonad val) >>= return ≡ SomeMonad val -- 'SomeMonad val' has type 'm a' just

-- like 'm' from the first example of the

-- second law

NullaryMonadType >>= return ≡ NullaryMonadTypeLaw 3

While the first two laws are relatively clear, the third law may be more difficult to understand. This law states that when a monadic value m is passed through (>>=) to the function f and then the result of that expression is passed to >>= g, the entire expression is exactly equivalent to passing m to a lambda expression that takes one parameter x and outputs the function f applied to x. By the definition of bind, f x must return a value wrapped in the same Monad. Because of this property, the resultant value of that expression can be passed through (>>=) to the function g, which also returns a monadic value.

(m >>= f) >>= g ≡ m >>= (\x -> f x >>= g) -- Like in the last law, 'm' has

-- has type 'm a'. The functions 'f'

-- and 'g' have types '(a -> m b)'

-- and '(b -> m c)' respectivelyAgain, it is possible to write this law with more explicit code. Like in the explicit examples for law 2, m has been replaced by SomeMonad val in order to be very clear that there can be multiple components to a monadic value. Although little has changed in the code, it is easier to see what value--namely, val--corresponds to the x in the lambda expression. After SomeMonad val is passed through (>>=) to f, the function f operates on val and returns a result still wrapped in the SomeMonad type constructor. We can call this new value SomeMonad newVal. Since it is still wrapped in the monadic context, SomeMonad newVal can thus be passed through the bind operation into the function g.

((SomeMonad val) >>= f) >>= g ≡ (SomeMonad val) >>= (\x -> f x >>= g)See: Monad Laws

Do Notation

Monadic syntax in Haskell is written in a sugared form, known as do notation. The advantages of this special syntax are that it is easier to write and is entirely equivalent to just applications of the monad operations. The desugaring is defined recursively by the rules:

do { a <- f ; m } ≡ f >>= \a -> do { m } -- bind 'f' to a, proceed to desugar

-- 'm'

do { f ; m } ≡ f >> do { m } -- evaluate 'f', then proceed to

-- desugar m

do { m } ≡ mThus, through the application of the desugaring rules, the following expressions are equivalent:

do

a <- f -- f, g, and h are bound to the names a,

b <- g -- b, and c. These names are then passed

c <- h -- to 'return' to ensure that all values

return (a, b, c) -- are wrapped in the appropriate monadic

-- context

do { -- N.B. '{}' and ';' characters are

a <- f; -- rarely used in do-notation

b <- g;

c <- h;

return (a, b, c)

}

f >>= \a ->

g >>= \b ->

h >>= \c ->

return (a, b, c)If one were to write the bind operator as an uncurried function ( this is not how Haskell uses it ) the same desugaring might look something like the following chain of nested binds with lambdas.

bindMonad(f, lambda a:

bindMonad(g, lambda b:

bindMonad(h, lambda c:

returnMonad (a,b,c))))In the do-notation, the monad laws from above are equivalently written:

Law 1

do y <- return x

f y

= do f xLaw 2

do x <- m

return x

= do mLaw 3

do b <- do a <- m

f a

g b

= do a <- m

b <- f a

g b

= do a <- m

do b <- f a

g bSee: Haskell 2010: Do Expressions

Maybe

The Maybe monad is the simplest first example of a monad instance. The Maybe monad models computations which fail to yield a value at any point during computation.

The Maybe type has two value constructors. The first, Just, is a unary constructor representing a successful computation, while the second, Nothing, is a nullary constructor that represents failure.

data Maybe a = Just a | NothingThe monad instance describes the implementation of (>>=) for Maybe by pattern matching on the possible inputs that could be passed to the bind operation (i.e., Nothing or Just x). The instance declaration also provides an implementation of return, which in this case is simply Just.

instance Monad Maybe where

(Just x) >>= k = k x -- 'k' is a function with type (a -> Maybe a)

Nothing >>= k = Nothing

return = Just -- Just's type signature is (a -> Maybe a), in

-- other words, extremely similar to the

-- type of 'return' in the typeclass

-- declaration above.The following code shows some simple operations to do within the Maybe monad.

In the first example, The value Just 3 is passed via (>>=) to the lambda function \x -> return (x + 1). x refers to the Int portion of Just 3, and we can use x in the second half of the lambda expression, where return (x + 1) evaluates to Just 4, indicating a successful computation.

(Just 3) >>= (\x -> return (x + 1))

-- Just 4In the second example, the value Nothing is passed via (>>=) to the same lambda function as in the previous example. However, according to the Maybe Monad instance, whenever Nothing is bound to a function, the expression's result will be Nothing.

Nothing >>= (\x -> return (x + 1))

-- NothingIn the next example, return is applied to 4 and returns Just 4.

return 4 :: Maybe Int

-- Just 4The next code examples show the use of do notation within the Maybe monad to do addition that might fail. Desugared examples are provided as well.

example1 :: Maybe Int

example1 = do

a <- Just 3 -- Bind 3 to name a

b <- Just 4 -- Bind 4 to name b

return $ a + b -- Evaluate (a + b), then use 'return' to ensure

-- the result is in the Maybe monad in order to

-- satisfy the type signature

-- Just 7

desugared1 :: Maybe Int

desugared1 = Just 3 >>= \a -> -- This example is the desugared

Just 4 >>= \b -> -- equivalent to example1

return $ a + b

-- Just 7

example2 :: Maybe Int

example2 = do

a <- Just 3 -- Bind 3 to name a

b <- Nothing -- Bind Nothing to name b

return $ a + b

-- Nothing -- This result might be somewhat surprising, since

-- addition within the Maybe monad can actually

-- return 'Nothing'. This result occurs because one

-- of the values, Nothing, indicates computational

-- failure. Since the computation failed at one

-- step within the process, the whole computation

-- fails, leaving us with 'Nothing' as the final

-- result.

desugared2 :: Maybe Int

desugared2 = Just 3 >>= \a -> -- This example is the desugared

Nothing >>= \b -> -- equivalent to example2

return $ a + b

-- NothingList

The List monad is the second simplest example of a monad instance. As always, this monad implements both (>>=) and return. The definition of bind says that when the list m is bound to a function f, the result is a concatenation of map f over the list m. The return method simply takes a single value x and injects into a singleton list [x].

instance Monad [] where

m >>= f = concat (map f m) -- 'm' is a list

return x = [x]In order to demonstrate the List monad's methods, we will define two functions: m and f. m is a simple list, while f is a function that takes a single Int and returns a two element list [1, 0].

m :: [Int]

m = [1,2,3,4]

f :: Int -> [Int]

f = \x -> [1,0] -- 'f' always returns [1, 0]The evaluation proceeds as follows:

m >>= f

==> [1,2,3,4] >>= \x -> [1,0]

==> concat (map (\x -> [1,0]) [1,2,3,4])

==> concat ([[1,0],[1,0],[1,0],[1,0]])

==> [1,0,1,0,1,0,1,0]The list comprehension syntax in Haskell can be implemented in terms of the list monad. List comprehensions can be considered syntactic sugar for more obviously monadic implementations. Examples a and b illustrate these use cases.

The first example (a) illustrates how to write a list comprehension. Although the syntax looks strange at first, there are elements of it that may look familiar. For instance, the use of <- is just like bind in a do notation: It binds an element of a list to a name. However, one major difference is apparent: a seems to lack a call to return. Not to worry, though, the [] fills this role. This syntax can be easily desugared by the compiler to an explicit invocation of return. Furthermore, it serves to remind the user that the computation takes place in the List monad.

a = [

f x y | -- Corresponds to 'f x y' in example b

x <- xs,

y <- ys,

x == y -- Corresponds to 'guard $ x == y' in example b

]The second example (b) shows the list comprehension above rewritten with do notation:

-- Identical to `a`

b = do

x <- xs

y <- ys

guard $ x == y -- Corresponds to 'x == y' in example a

return $ f x y -- Corresponds to the '[]' and 'f x y' in example aThe final examples are further illustrations of the List monad. The functions below each return a list of 3-tuples which contain the possible combinations of the three lists that get bound the names a, b, and c. N.B.: Only values in the list bound to a can be used in a position of the tuple; the same fact holds true for the lists bound to b and c.

example :: [(Int, Int, Int)]

example = do

a <- [1,2]

b <- [10,20]

c <- [100,200]

return (a,b,c)

-- [(1,10,100),(1,10,200),(1,20,100),(1,20,200),(2,10,100),(2,10,200),(2,20,100),(2,20,200)]

desugared :: [(Int, Int, Int)]

desugared = [1, 2] >>= \a ->

[10, 20] >>= \b ->

[100, 200] >>= \c ->

return (a, b, c)

-- [(1,10,100),(1,10,200),(1,20,100),(1,20,200),(2,10,100),(2,10,200),(2,20,100),(2,20,200)]IO

Perhaps the most (in)famous example in Haskell of a type that forms a monad is IO. A value of type IO a is a computation which, when performed, does some I/O before returning a value of type a. These computations are called actions. IO actions executed in main are the means by which a program can operate on or access information in the external world. IO actions allow the program to do many things, including, but not limited to:

- Print a

Stringto the terminal - Read and parse input from the terminal

- Read from or write to a file on the system

- Establish an

sshconnection to a remote computer - Take input from a radio antenna for singal processing

Conceptualizing I/O as a monad enables the developer to access information outside the program, but operate on the data with pure functions. The following examples will show how we can use IO actions and IO values to receive input from stdin and print to stdout.

Perhaps the most immediately useful function for doing I/O in Haskell is putStrLn. This function takes a String and returns an IO (). Calling it from main will result in the String being printed to stdout followed by a newline character.

putStrLn :: String -> IO ()Here is some code that prints a couple of lines to the terminal. The first invocation of putStrLn is executed, causing the String to be printed to stdout. The result is bound to a lambda expression that discards its argument, and then the next putStrLn is executed.

main :: IO ()

main = putStrLn "Vesihiisi sihisi hississäään." >>=

\_ -> putStrLn "Or in English: 'The water devil was hissing

in her elevator'."

-- Sugared code, written with do notation

main :: IO ()

main = do putStrLn "Vesihiisi sihisi hississäään."

putStrLn "Or in English: 'The water devil was hissing in her

elevator'."Another useful function is getLine which has type IO String. This function gets a line of input from stdin. The developer can then bind this line to a name in order to operate on the value within the program.

getLine :: IO StringThe code below demonstrates a simple combination of these two functions as well as desugaring IO code. First, putStrLn prints a String to stdout to ask the user to supply their name, with the result being bound to a lambda that discards it argument. Then, getLine is executed, supplying a prompt to the user for entering their name. Next, the resultant IO String is bound to name and passed to putStrLn. Finally, the program prints the name to the terminal.

main :: IO ()

main = do putStrLn "What is your name: "

name <- getLine

putStrLn nameThe next code block is the desugared equivalent of the previous example; however, the uses of (>>=) are made explict.

main :: IO ()

main = putStrLn "What is your name:" >>=

\_ -> getLine >>=

\name -> putStrLn nameOur final example executes in the same way as the previous two examples. This example, though, uses the special (>>) operator to take the place of binding a result to the lamda that discards its argument.

main :: IO ()

main = putStrLn "What is your name: " >> (getLine >>= (\name -> putStrLn name))See: Haskell 2010: Basic/Input Output

What's the point?

Although it is difficult, if not impossible, to touch, see, or otherwise physically interact with a monad, this construct has some very interesting implications for programmers. For instance, consider the non-intuitive fact that we now have a uniform interface for talking about three very different, but foundational ideas for programming: Failure, Collections and Effects.

Let's write down a new function called sequence which folds a function mcons over a list of monadic computations. We can think of mcons as analogous to the list constructor (i.e. (a : b : [])) except it pulls the two list elements out of two monadic values (p,q) by means of bind. The bound values are then joined with the list constructor :, before finally being rewrapped in the appropriate monadic context with return.

sequence :: Monad m => [m a] -> m [a]

sequence = foldr mcons (return [])

mcons :: Monad m => m t -> m [t] -> m [t]

mcons p q = do

x <- p -- 'x' refers to a singleton value

y <- q -- 'y' refers to a list. Because of this fact, 'x' can be

return (x:y) -- prepended to itWhat does this function mean in terms of each of the monads discussed above?

Maybe

Sequencing a list of values within the Maybe context allows us to collect the results of a series of computations which can possibly fail. However, sequence yields the aggregated values only if each computation succeeds. In other words, if even one of the Maybe values in the initial list passed to sequenceis a Nothing, the result of sequence will also be Nothing.

sequence :: [Maybe a] -> Maybe [a]sequence [Just 3, Just 4]

-- Just [3,4]

sequence [Just 3, Just 4, Nothing] -- Since one of the results is Nothing,

-- Nothing -- the whole computation failsList

The bind operation for the list monad forms the pairwise list of elements from the two operands. Thus, folding the binds contained in mcons over a list of lists with sequence implements the general Cartesian product for an arbitrary number of lists.

sequence :: [[a]] -> [[a]]sequence [[1,2,3],[10,20,30]]

-- [[1,10],[1,20],[1,30],[2,10],[2,20],[2,30],[3,10],[3,20],[3,30]]IO

Applying sequence within the IO context results in still a different result. The function takes a list of IO actions, performs them sequentially, and then returns the list of resulting values in the order sequenced.

sequence :: [IO a] -> IO [a]sequence [getLine, getLine, getLine]

-- a -- a, b, and 9 are the inputs given by the

-- b -- user at the prompt

-- 9

-- ["a", "b", "9"] -- All inputs are returned in a list as

-- an IO [String].So there we have it, three fundamental concepts of computation that are normally defined independently of each other actually all share this similar structure. This unifying pattern can be abstracted out and reused to build higher abstractions that work for all current and future implementations. If you want a motivating reason for understanding monads, this is it! These insights are the essence of what I wish I knew about monads looking back.

See: Control.Monad

Reader Monad

The reader monad lets us access shared immutable state within a monadic context.

ask :: Reader r r

asks :: (r -> a) -> Reader r a

local :: (r -> r) -> Reader r a -> Reader r a

runReader :: Reader r a -> r -> aimport Control.Monad.Reader

data MyContext = MyContext

{ foo :: String

, bar :: Int

} deriving (Show)

computation :: Reader MyContext (Maybe String)

computation = do

n <- asks bar

x <- asks foo

if n > 0

then return (Just x)

else return Nothing

ex1 :: Maybe String

ex1 = runReader computation $ MyContext "hello" 1

ex2 :: Maybe String

ex2 = runReader computation $ MyContext "haskell" 0A simple implementation of the Reader monad:

newtype Reader r a = Reader { runReader :: r -> a }

instance Monad (Reader r) where

return a = Reader $ \_ -> a

m >>= k = Reader $ \r -> runReader (k (runReader m r)) r

ask :: Reader a a

ask = Reader id

asks :: (r -> a) -> Reader r a

asks f = Reader f

local :: (r -> r) -> Reader r a -> Reader r a

local f m = Reader $ runReader m . fWriter Monad

The writer monad lets us emit a lazy stream of values from within a monadic context.

tell :: w -> Writer w ()

execWriter :: Writer w a -> w

runWriter :: Writer w a -> (a, w)import Control.Monad.Writer

type MyWriter = Writer [Int] String

example :: MyWriter

example = do

tell [1..3]

tell [3..5]

return "foo"

output :: (String, [Int])

output = runWriter example

-- ("foo", [1, 2, 3, 3, 4, 5])A simple implementation of the Writer monad:

import Data.Monoid

newtype Writer w a = Writer { runWriter :: (a, w) }

instance Monoid w => Monad (Writer w) where

return a = Writer (a, mempty)

m >>= k = Writer $ let

(a, w) = runWriter m

(b, w') = runWriter (k a)

in (b, w `mappend` w')

execWriter :: Writer w a -> w

execWriter m = snd (runWriter m)

tell :: w -> Writer w ()

tell w = Writer ((), w)This implementation is lazy, so some care must be taken that one actually wants to only generate a stream of thunks. Most often the lazy writer is not suitable for use, instead implement the equivalent structure by embedding some monomial object inside a StateT monad, or using the strict version.

import Control.Monad.Writer.StrictState Monad

The state monad allows functions within a stateful monadic context to access and modify shared state.

runState :: State s a -> s -> (a, s)

evalState :: State s a -> s -> a

execState :: State s a -> s -> simport Control.Monad.State

test :: State Int Int

test = do

put 3

modify (+1)

get

main :: IO ()

main = print $ execState test 0The state monad is often mistakenly described as being impure, but it is in fact entirely pure and the same effect could be achieved by explicitly passing state. A simple implementation of the State monad takes only a few lines:

newtype State s a = State { runState :: s -> (a,s) }

instance Monad (State s) where

return a = State $ \s -> (a, s)

State act >>= k = State $ \s ->

let (a, s') = act s

in runState (k a) s'

get :: State s s

get = State $ \s -> (s, s)

put :: s -> State s ()

put s = State $ \_ -> ((), s)

modify :: (s -> s) -> State s ()

modify f = get >>= \x -> put (f x)

evalState :: State s a -> s -> a

evalState act = fst . runState act

execState :: State s a -> s -> s

execState act = snd . runState actMonad Tutorials

So many monad tutorials have been written that it begs the question: what makes monads so difficult when first learning Haskell? I hypothesize there are three aspects to why this is so:

- There are several levels on indirection with desugaring.

A lot of the Haskell we write is radically rearranged and transformed into an entirely new form under the hood.

Most monad tutorials will not manually expand out the do-sugar. This leaves the beginner thinking that monads are a way of dropping into a pseudo-imperative language inside of code and further fuels that misconception that specific instances like IO are monads in their full generality.

main = do

x <- getLine

putStrLn x

return ()Being able to manually desugar is crucial to understanding.

main =

getLine >>= \x ->

putStrLn x >>= \_ ->

return ()- Asymmetric binary infix operators for higher order functions are not common in other languages.

(>>=) :: Monad m => m a -> (a -> m b) -> m bOn the left hand side of the operator we have an m a and on the right we have a -> m b. Although some languages do have infix operators that are themselves higher order functions, it is still a rather rare occurrence.

So with a function desugared, it can be confusing that (>>=) operator is in fact building up a much larger function by composing functions together.

main =

getLine >>= \x ->

putStrLn >>= \_ ->

return ()Written in prefix form, it becomes a little bit more digestible.

main =

(>>=) getLine (\x ->

(>>=) putStrLn (\_ ->

return ()

)

)Perhaps even removing the operator entirely might be more intuitive coming from other languages.

main = bind getLine (\x -> bind putStrLn (\_ -> return ()))

where

bind x y = x >>= y- Ad-hoc polymorphism is not commonplace in other languages.

Haskell's implementation of overloading can be unintuitive if one is not familiar with type inference. It is abstracted away from the user, but the (>>=) or bind function is really a function of 3 arguments with the extra typeclass dictionary argument ($dMonad) implicitly threaded around.

main $dMonad = bind $dMonad getLine (\x -> bind $dMonad putStrLn (\_ -> return $dMonad ()))Except in the case where the parameter of the monad class is unified ( through inference ) with a concrete class instance, in which case the instance dictionary ($dMonadIO) is instead spliced throughout.

main :: IO ()

main = bind $dMonadIO getLine (\x -> bind $dMonadIO putStrLn (\_ -> return $dMonadIO ()))Now, all of these transformations are trivial once we understand them, they're just typically not discussed. In my opinion the fundamental fallacy of monad tutorials is not that intuition for monads is hard to convey ( nor are metaphors required! ), but that novices often come to monads with an incomplete understanding of points (1), (2), and (3) and then trip on the simple fact that monads are the first example of a Haskell construct that is the confluence of all three.

Monad Transformers

mtl / transformers

So, the descriptions of Monads in the previous chapter are a bit of a white lie. Modern Haskell monad libraries typically use a more general form of these, written in terms of monad transformers which allow us to compose monads together to form composite monads. The monads mentioned previously are subsumed by the special case of the transformer form composed with the Identity monad.

| Monad | Transformer | Type | Transformed Type |

|---|---|---|---|

| Maybe | MaybeT | Maybe a |

m (Maybe a) |

| Reader | ReaderT | r -> a |

r -> m a |

| Writer | WriterT | (a,w) |

m (a,w) |

| State | StateT | s -> (a,s) |

s -> m (a,s) |

type State s = StateT s Identity

type Writer w = WriterT w Identity

type Reader r = ReaderT r Identity

instance Monad m => MonadState s (StateT s m)

instance Monad m => MonadReader r (ReaderT r m)

instance (Monoid w, Monad m) => MonadWriter w (WriterT w m)In terms of generality the mtl library is the most common general interface for these monads, which itself depends on the transformers library which generalizes the "basic" monads described above into transformers.

Transformers

At their core monad transformers allow us to nest monadic computations in a stack with an interface to exchange values between the levels, called lift.

lift :: (Monad m, MonadTrans t) => m a -> t m a

liftIO :: MonadIO m => IO a -> m aclass MonadTrans t where

lift :: Monad m => m a -> t m a

class (Monad m) => MonadIO m where

liftIO :: IO a -> m a

instance MonadIO IO where

liftIO = idJust as the base monad class has laws, monad transformers also have several laws:

Law #1

lift . return = returnLaw #2

lift (m >>= f) = lift m >>= (lift . f)Or equivalently:

Law #1

lift (return x)

= return xLaw #2

do x <- lift m

lift (f x)

= lift $ do x <- m

f xIt's useful to remember that transformers compose outside-in but are unrolled inside out.

See: Monad Transformers: Step-By-Step

Basics

The most basic use requires us to use the T-variants for each of the monad transformers in the outer layers and to explicitly lift and return values between the layers. Monads have kind (* -> *), so monad transformers which take monads to monads have ((* -> *) -> * -> *):

Monad (m :: * -> *)

MonadTrans (t :: (* -> *) -> * -> *)So, for example, if we wanted to form a composite computation using both the Reader and Maybe monads we can now put the Maybe inside of a ReaderT to form ReaderT t Maybe a.

import Control.Monad.Reader

type Env = [(String, Int)]

type Eval a = ReaderT Env Maybe a

data Expr

= Val Int

| Add Expr Expr

| Var String

deriving (Show)

eval :: Expr -> Eval Int

eval ex = case ex of

Val n -> return n

Add x y -> do

a <- eval x

b <- eval y

return (a+b)

Var x -> do

env <- ask

val <- lift (lookup x env)

return val

env :: Env

env = [("x", 2), ("y", 5)]

ex1 :: Eval Int

ex1 = eval (Add (Val 2) (Add (Val 1) (Var "x")))

example1, example2 :: Maybe Int

example1 = runReaderT ex1 env

example2 = runReaderT ex1 []The fundamental limitation of this approach is that we find ourselves lift.lift.lifting and return.return.returning a lot.

ReaderT

For example, there exist three possible forms of the Reader monad. The first is the Haskell 98 version that no longer exists, but is useful for understanding the underlying ideas. The other two are the transformers and mtl variants.

Reader

newtype Reader r a = Reader { runReader :: r -> a }

instance MonadReader r (Reader r) where

ask = Reader id

local f m = Reader (runReader m . f)ReaderT

newtype ReaderT r m a = ReaderT { runReaderT :: r -> m a }

instance (Monad m) => Monad (ReaderT r m) where

return a = ReaderT $ \_ -> return a

m >>= k = ReaderT $ \r -> do

a <- runReaderT m r

runReaderT (k a) r

instance MonadTrans (ReaderT r) where

lift m = ReaderT $ \_ -> mMonadReader

class (Monad m) => MonadReader r m | m -> r where

ask :: m r

local :: (r -> r) -> m a -> m a

instance (Monad m) => MonadReader r (ReaderT r m) where

ask = ReaderT return

local f m = ReaderT $ \r -> runReaderT m (f r)So, hypothetically the three variants of ask would be:

ask :: Reader r r

ask :: Monad m => ReaderT r m r

ask :: MonadReader r m => m rIn practice only the last one is used in modern Haskell.

Newtype Deriving

Newtypes let us reference a data type with a single constructor as a new distinct type, with no runtime overhead from boxing, unlike an algebraic datatype with a single constructor. Newtype wrappers around strings and numeric types can often drastically reduce accidental errors.

Consider the case of using a newtype to distinguish between two different text blobs with different semantics. Both have the same runtime representation as a text object, but are distinguished statically, so that plaintext can not be accidentally interchanged with encrypted text.

newtype Plaintext = Plaintext Text

newtype Crytpotext = Cryptotext Text

encrypt :: Key -> Plaintext -> Cryptotext

decrypt :: Key -> Cryptotext -> PlaintextThe other common use case is using newtypes to derive logic for deriving custom monad transformers in our business logic. Using -XGeneralizedNewtypeDeriving we can recover the functionality of instances of the underlying types composed in our transformer stack.

{-# LANGUAGE GeneralizedNewtypeDeriving #-}

newtype Velocity = Velocity { unVelocity :: Double }

deriving (Eq, Ord)

v :: Velocity

v = Velocity 2.718

x :: Double

x = 2.718

-- Type error is caught at compile time even though

-- they are the same value at runtime!

err = v + x

newtype Quantity v a = Quantity a

deriving (Eq, Ord, Num, Show)

data Haskeller

type Haskellers = Quantity Haskeller Int

a = Quantity 2 :: Haskellers

b = Quantity 6 :: Haskellers

totalHaskellers :: Haskellers

totalHaskellers = a + bCouldn't match type `Double' with `Velocity'

Expected type: Velocity

Actual type: Double

In the second argument of `(+)', namely `x'

In the expression: v + xUsing newtype deriving with the mtl library typeclasses we can produce flattened transformer types that don't require explicit lifting in the transform stack. For example, here is a little stack machine involving the Reader, Writer and State monads.

{-# LANGUAGE GeneralizedNewtypeDeriving #-}

import Control.Monad.Reader

import Control.Monad.Writer

import Control.Monad.State

type Stack = [Int]

type Output = [Int]

type Program = [Instr]

type VM a = ReaderT Program (WriterT Output (State Stack)) a

newtype Comp a = Comp { unComp :: VM a }

deriving (Monad, MonadReader Program, MonadWriter Output, MonadState Stack)

data Instr = Push Int | Pop | Puts

evalInstr :: Instr -> Comp ()

evalInstr instr = case instr of

Pop -> modify tail

Push n -> modify (n:)

Puts -> do

tos <- gets head

tell [tos]

eval :: Comp ()

eval = do

instr <- ask

case instr of

[] -> return ()

(i:is) -> evalInstr i >> local (const is) eval

execVM :: Program -> Output

execVM = flip evalState [] . execWriterT . runReaderT (unComp eval)

program :: Program

program = [

Push 42,

Push 27,

Puts,

Pop,

Puts,

Pop

]

main :: IO ()

main = mapM_ print $ execVM programPattern matching on a newtype constructor compiles into nothing. For example theextractB function does not scrutinize the MkB constructor like the extractA does, because MkB does not exist at runtime, it is purely a compile-time construct.

data A = MkA Int

newtype B = MkB Int

extractA :: A -> Int

extractA (MkA x) = x

extractB :: B -> Int

extractB (MkB x) = xEfficiency

The second monad transformer law guarantees that sequencing consecutive lift operations is semantically equivalent to lifting the results into the outer monad.

do x <- lift m == lift $ do x <- m

lift (f x) f xAlthough they are guaranteed to yield the same result, the operation of lifting the results between the monad levels is not without cost and crops up frequently when working with the monad traversal and looping functions. For example, all three of the functions on the left below are less efficient than the right hand side which performs the bind in the base monad instead of lifting on each iteration.

-- Less Efficient More Efficient

forever (lift m) == lift (forever m)

mapM_ (lift . f) xs == lift (mapM_ f xs)

forM_ xs (lift . f) == lift (forM_ xs f)Monad Morphisms

The base monad transformer package provides a MonadTrans class for lifting to another monad:

lift :: Monad m => m a -> t m aBut often times we need to work with and manipulate our monad transformer stack to either produce new transformers, modify existing ones or extend an upstream library with new layers. The mmorph library provides the capacity to compose monad morphism transformation directly on transformer stacks. The equivalent of type transformer type-level map is the hoist function.

hoist :: Monad m => (forall a. m a -> n a) -> t m b -> t n bHoist takes a monad morphism (a mapping from a m a to a n a) and applies in on the inner value monad of a transformer stack, transforming the value under the outer layer.

The monad morphism generalize takes an Identity monad into any another monad m.

generalize :: Monad m => Identity a -> m aFor example, it generalizes State s a (which is StateT s Identity a) to StateT s m a.

So we can generalize an existing transformer to lift an IO layer onto it.

import Control.Monad.State

import Control.Monad.Morph

type Eval a = State [Int] a

runEval :: [Int] -> Eval a -> a

runEval = flip evalState

pop :: Eval Int

pop = do

top <- gets head

modify tail

return top

push :: Int -> Eval ()

push x = modify (x:)

ev1 :: Eval Int

ev1 = do

push 3

push 4

pop

pop

ev2 :: StateT [Int] IO ()

ev2 = do

result <- hoist generalize ev1

liftIO $ putStrLn $ "Result: " ++ show resultSee: mmorph

Language Extensions

It's important to distinguish between different categories of language extensions general and specialized.

The inherent problem with classifying the extensions into the general and specialized categories is that it's a subjective classification. Haskellers who do type system research will have a very different interpretation of Haskell than people who do web programming. As such this is a conservative assessment, as an arbitrary baseline let's consider FlexibleInstances and OverloadedStrings "everyday" while GADTs and TypeFamilies are "specialized".

Key

- Benign implies both that importing the extension won't change the semantics of the module if not used and that enabling it makes it no easier to shoot yourself in the foot.