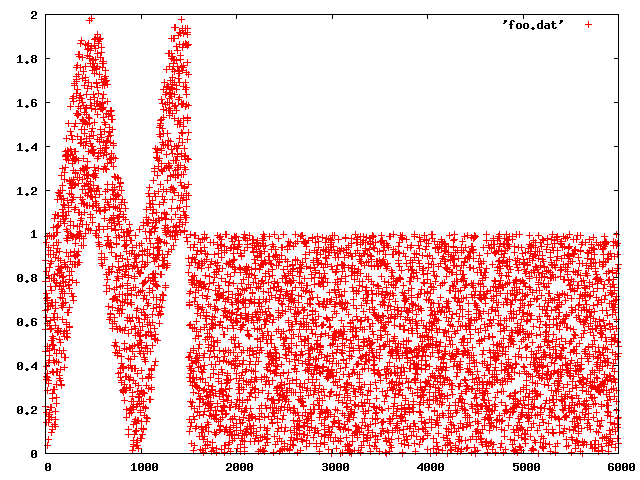

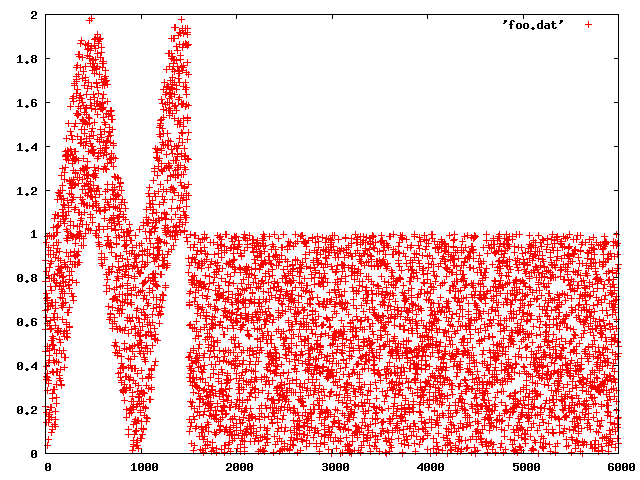

Suitably noisy data

Noise!

Someone asked a thoughtful question on Monday about folds, specifically about foldr vs foldl and foldl'.

I wasn't very happy with the answers I came up with, because I was in a bit of a rush.

Always be suspicious of teachers who get into a muddle if you spring an unexpected question!

Do they really know what they're talking about, or are they just good at reading slides?

Today, let's slow down and think about folds a little more.

Here's the definition of foldr:

foldr :: (a -> b -> b) -> b -> [a] -> b

foldr f = go

where

go (x:xs) = f x (go xs)

go z = zIt associates to the right, so here's what a use of foldr looks like, expanded:

foldr f z [a,b,c,d] = f a (f b (f c (f d z)))Suppose we want to sum the elements of a list using foldr:

foldr (+) 0 [3..6]In order for the (+) operator to add two numbers, it has to know what each one is, so at least for the usual types we work with, it's strict in both of its arguments.

(Note: (+) is not required to be strict. That behaviour depends on the instance of Num in use.)

Let's do a stepwise expansion of the foldr-based sum.

foldr (+) 0 (3:{-...-}) = 3 + {-...-}We can't produce an answer after consuming the first element of the list, because + is strict in its arguments.

foldr (+) 0 (3:4:{-...-}) = 3 + (4 + {-...-})Neither can we produce an answer after the second element.

In fact, we're building up an expression that we can't reduce to a smaller form, because we're associating to the right and we haven't seen the rest of the list yet (see the definition of foldr).

In fact, not until we reach the end of the list do we have a number on the right hand side of a +:

foldr (+) 0 (3:4:5:6:[]) = 3 + (4 + (5 + (6 + 0)))What we've done is build up a big expression that we can't evaluate until we've seen the entire list.

This is due in equal part to two factors:

foldr is right associative.

The function we're folding with is strict in both arguments.

If foldr was getting us all screwed up due to the associativity, maybe foldl will do better.

foldl :: (a -> b -> a) -> a -> [b] -> a

foldl f = go

where

go z (x:xs) = go (f z x) xs

go z _ = zLet's see an expansion.

foldl f z [a,b,c,d] = f (f (f (f z a) b) c) dOuch! Maybe we can make that clearer with infix syntax?

foldl (+) z [a,b,c,d] = (((z + a) + b) + c) + dFor summing elements, this looks better than foldr on the face of things, because at every step through the list, the function we're folding with can see both of its arguments.

But there's a catch.

None of the intermediate results are visible to the caller of foldl, so the caller cannot force those results to be evaluated.

In other words, we're still building up a big thunk!

This function is defined for us in Data.List, and it has the same type as foldl:

{-# LANGUAGE BangPatterns #-}

foldl' :: (a -> b -> a) -> a -> [b] -> a

foldl' f = go

where

go !z (x:xs) = go (f z x) xs

go !z _ = zThe crucial difference lies with the strictness annotation, which forces each intermediate result to be evaluated to WHNF.

That same sum again.

foldl' (+) 0 [1,2,3]First step:

foldl' (+) 0 (1:{-...-}) = foldl' (0+1) {-...-}

= foldl' 1 {-...-}Second step:

foldl' (+) 1 (2:{-...-}) = foldl' (1+2) {-...-}

= foldl' 2 {-...-}The structure of foldl' causes us to replace the accumulator z with a new value at each step.

The strictness annotation (we could have used seq too) causes that accumulator to be evaluated to WHNF before we continue.

Last step:

foldl' (+) 3 [] = 3I hope this makes the difference between foldl' and foldr more apparent!

It should be clear by now that plain old foldl is very rarely useful.

In fact, I've never personally found a situation where it was the right kind of fold to use.

If you find yourself thinking "I need a left fold", it's safe to assume that foldl' is what you'll need.

We've discussed the fact that using foldr with a strict function is a bad idea, because it generates a chain of thunks.

What if we used a function that was lazy in its second argument?

Hmm. Conveniently enough, we have an everyday example of just such a function: the list constructor!

What does this function do?

foldr (:) []Let's do another expansion, but this time we'll align the input list and result expression lexically:

foldr (<+>) z

(a : (b : (c : (d : [])))) =

(a <+> (b <+> (c <+> (d <+> z ))))Notice that each (:) in the input list is "replaced" by an application of (<+>) in the result, and the [] at the end is replaced with z.

(It doesn't matter what (<+>) actually does here. We're purely interested in the structures of the two expressions.)

Write a version of map that does not pattern match on its input list, but instead uses foldr:

map :: (a -> b) -> [a] -> [b]

map (+1) [1,2,3] == [2,3,4]myMap f = foldr go []

where go x xs = f x : xsWrite a version of filter that again uses foldr:

filter :: (a -> Bool) -> [a] -> [a]

filter odd [0..5] == [1,3,5]myFilter p = foldr go []

where

go x xs

| p x = x : xs

| otherwise = xsWrite an append function that has the same behaviour as the (++) operator:

(++) :: [a] -> [a] -> [a]

[1,2,3] ++ [4,5,6] == [1,2,3,4,5,6]For bonus points, make your implementation as short as possible.

append = flip (foldr (:))A handy rule of thumb for distinguishing the two:

Concurrency: "I have a number of potentially unrelated tasks to deal with."

Parallelism: "I want my single question to be answered sooner if I can throw more resources at the problem."

Many languages blur these notions together, by providing a "one size fits all" toolkit for concurrency and parallelism.

We do not need multiple CPU cores to achieve concurrent processing, nor do we even need threads.

However, a good language runtime and programming environment will offer improved throughput and latency on concurrent problems if it can use multiple cores.

The essence of parallel programming is to have multiple computing elements process parts of a problem simultaneously.

GHC provides a "baked in" way of using multiple CPU cores to do this.

To take advantage of this, we must build our executables with -threaded.

This links a Haskell program against the "threaded runtime", which can schedule lightweight Haskell threads across multiple CPU cores, and which can also run parallel programs on those cores.

In the Control.Parallel module lives an intriguing function.

par :: a -> b -> bThis is the parallel cousin to seq. It tells the threaded runtime that it may be beneficial to evaluate the first argument in parallel with the second.

Like seq, par returns the value of its second argument.

As it happens, we need another oddball function to work with par.

pseq :: a -> b -> bThis is semantically identical to seq, but with a subtle operational difference.

seq is strict in both arguments, so the compiler can take an expression like this:

a `seq` b And transform it into this:

b `seq` a `seq` bThis can be a problem when annotating code for parallelism, when we need more control over the order of evaluation.

In contrast to seq, pseq is only strict in its first argument.

This restricts the transformations that the compiler can perform, and lets us retain control of the evaluation order.

We use par when two conditions are true:

The first argument is somewhat expensive to compute.

We don't have an immediate need for the result of the first computation.

If the first argument is too cheap, then the book-keeping overhead to evaluate it in parallel will diminish or erase any performance gain.

The par operator makes use of the overlap between lazy evaluation and futures.

To implement lazy evaluation, we need thunks: a representation for expressions which are not yet evaluated but whose value may later be demanded.

A future is a computation whose value is being evaluated in parallel and which we may wait for.

par offers a way to annotate a lazy computation as being potentially profitable to evaluate in parallel.

In other words, it turns a lazy computation into a future.

In practice, par and pseq are a pain in the neck to use.

To use par effectively, we have to get some surprisingly subtle things right:

Pass an unevaluated computation to par

The unevaluated computation must be somewhat expensive

Ensure that its value will not be required by the enclosing computation for a while

Ensure that the result is shared by the rest of the program

If we miss any of 1 through 3, then we achieve little or no speedup.

If we miss 4, then the GC may get rid of the computed result before it can be used.

The monad-par package provides a library that makes parallelism easier to achieve and reason about.

cabal install monad-par

It gives us a type named Par:

newtype Par a

instance Functor Par

instance Applicative Par

instance Monad ParTo evaluate a Par computation, we use runPar:

runPar :: Par a -> aNotice that this has no side effects, so it will run deterministically.

To start a parallel computation, we use the fork action:

fork :: Par () -> Par ()Forked tasks need a way to communicate with each other, for which we use the IVar type:

data IVar a

deriving (Eq)

new :: Par (IVar a)

get :: IVar a -> Par a

put :: (NFData a) => IVar a -> a -> Par ()The IVar type is a write-once mutable reference. The get function blocks until a put has been performed.

We know that seq evaluates an expression to WHNF, but sometimes we want to completely evaluate an expression to normal form.

Enter the deeqseq package:

import Control.DeepSeq

class NFData a where

rnf :: a -> ()We can write an NFData instance for any custom type. Our rnf implementation must reduce an expression to normal form.

data Foo a = Foo Int a

instance (NFData a) => NFData (Foo a) where

rnf (Foo x y) = rnf x `seq` rnf yAn extremely common pattern is for a thread to fork several children and wait for them to finish.

We can easily capture this idea with a suitable combinator.

spawn :: (NFData a) => Par a -> Par (IVar a)

spawn act = do

i <- new

fork (put i =<< act)

return iIn fact, usually all we want is to simply wait for all children and return a list of their results.

parMapM :: (NFData b) => (a -> Par b) -> [a] -> Par [b]

parMapM f acts = do

ivars <- mapM (spawn . f) acts

mapM get ivarsSuppose we need the mean of a list of numbers. The naive way to do so would be sum xs / length xs, but this has numerical stability problems.

Here's a more stable method:

import Data.List

data T a = T !a !Int

mean :: (RealFrac a) => [a] -> a

mean = fini . foldl' go (T 0 0)

where

fini (T a _) = a

go (T m n) x = T m' n'

where m' = m + (x - m) / fromIntegral n'

n' = n + 1Supposing we have a set of sample data that we know little about, in particular its precision and variance.

This is exactly the kind of problem that the criterion benchmarking library faces: we have performance data, but it's dirty and doesn't follow any particular statistical distribution.

A technique called the jackknife lets us estimate these parameters.

We successively recompute a function (such as mean) over subsets of a sample, each time with a sliding window cut out.

For a w-width window and k samples, the jackknife has a cost of O((k - w)2).

jackknife :: ([a] -> b) -> [a] -> [b]

jackknife f = map f . resamples 500It's easy to write a resampling function using familiar building blocks.

resamples :: Int -> [a] -> [[a]]

resamples k xs =

take (length xs - k) $

zipWith (++) (inits xs) (map (drop k) (tails xs))Our function resamples a list with a window size of k.

>> resamples 2 [0..5]

[[ 2,3,4,5],

[0, 3,4,5],

[0,1, 4,5],

[0,1,2, 5]]The nice thing about the jackknife is that each element of the result list is independent, so it's an "embarrassingly parallel" problem.

The monad-par package is very helpful in making this trivial to parallelize.

import Control.Monad.Par (runPar, parMap)

jackknifeP f = runPar . parMap f . resamples 500Let's try it out!

Noise!

import System.Random.Mersenne

crud = zipWith (\x a -> sin (x / 300)**2 + a) [0..]

main = do

(xs,ys) <- splitAt 1500 . take 6000 <$> (randoms =<< getStdGen)

let rs = crud xs ++ ys

putStrLn $ "sample mean: " ++ show (mean rs)

let j = jackknifeP mean rs

putStrLn $ "jack mean min: " ++ show (minimum j)

putStrLn $ "jack mean max: " ++ show (maximum j)We have to remember to build using both -threaded and -rtsopts.

ghc -O --make -rtsopts -threaded Jackknife

-threaded gives us the threaded runtime.

We'll need -rtsopts to tell the RTS how many cores to use. It will default to just one if we do not specify otherwise.

To use e.g. 3 cores:

./Jackknife +RTS -N3

If we want to use all cores:

./Jackknife +RTS -N

Meanwhile, par and the monad-par packages are not the only ways to achieve parallel speedups in Haskell.

There are other more "researchy" projects under way:

For multicore machines: Data Parallel Haskell

For GPU computing: the accelerate package

DPH is the name of a research project to support nested data parallelism under GHC on multicore CPUs.

Nested data parallelism extends the programming model of flat data parallelism, as known from modern Fortran dialects, to:

Irregular parallel computations (such as divide-and-conquer algorithms) and

Irregular data structures (such as sparse matrices and tree structures).

This project is still a work in progress, and is quite technically intricate.

The accelerate library defines an embedded language for regular, multi-dimensional array computations with multiple backends to facilitate high-performance implementations.

Currently, there are two backends:

An interpreter that serves as a reference implementation of the intended semantics of the language.

A CUDA backend generating code for CUDA-capable NVIDIA GPUs.

You'll sometimes hear uninformed people claim that functional programming is some kind of magic pixie dust for parallel programming.

This is not really true.

Certainly, aspects of the programming model make incidental parts of the problem easier.

However, tricky considerations like data dependencies, memory placement, and thread scheduling have every bit as insidious an effect on parallel performance in Haskell as in C.

Parallel programming is a subtle art no matter which language you do it in.